RAID controller, Backplane and Chassis under OpenZFS

About forbidden fruits and a lot of hardware choices in ZFS systems.

How to use RAID controllers and HBAs with OpenZFS

In a previous article, we talked about how OpenZFS is both a filesystem and a disk/RAID manager at the same time. This usually raises the question of whether or not we could use RAID controllers with our OpenZFS builds.

Can we use RAID controllers with OpenZFS/TrueNAS?

The short answer is: Yes. But the more accurate question would be: Do you really need a RAID controller in your build, or would a much cheaper and less complicated HBA suffice?

There is a common myth amongst the OpenZFS community that RAID controllers are a forbidden fruit that should not be used in any OpenZFS build. This myth might have originated from the fact that ZFS can compose and handle its RAIDZ configurations just fine – without the need for extra hardware. It might also have stemmed from FreeBSD’s poor support for some (actually most) of the available RAID controllers. After all, FreeBSD has always been on the conservative side when it comes to adopting newer technologies, and far fewer drivers are available for FreeBSD than for Linux.

If you factor in OpenZFS’s ability to utilise ARC, L2ARC, and SSD-based SLOG caches, then the need for a RAID controller diminishes even more.

What are the potential benefits of RAID controllers?

In general, RAID controllers can improve a server’s performance, and usable capacity, by:

- CPU offloading: by performing the RAID computations on-card, the system CPU will have more cycles to spend on other tasks, such as data encryption and deduplication.

- Caching reads and writes: this can improve HDD-based servers’ performance.

- SAS/JBOD expansion: Supporting an increased number of disks and/or external JBODs.

What to avoid when using a RAID controller with OpenZFS?

Based on my experience, one can use a RAID controller in an OpenZFS build on the following conditions:

- The RAID card should be one that is supported by FreeBSD/TrueNAS.

- The RAID card must support standard RAID levels and not use uncommon proprietary RAID or partitioning schemes.

- Should the RAID card fail, you must be able to replace it with any available card – or plug the disks directly into your mainboard/backplane to continue working.

- Do not use a controller with caching capability unless the cache is Battery-Backed.

- If the RAID controller offers on-chip encryption do NOT enable that feature.

- Avoid the controller’s CPU overheating.

CPU offloading is a feature available for RAID controllers, network adapters, cryptographic modules, etc. This is basically a form of Computation Offloading – sometimes also called Hardware Acceleration – where the RAID chip takes care of the computational burden of assembling files from the data shards saved on different disks, and vice versa. If you are not enabling CPU-intensive features in your build, such as encryption or deduplication, then maybe there is not much help a dedicated RAID controller with CPU offloading can offer in this regard.

When you connect your disks to a RAID controller you need to wipe and “initialise” them in order to create a RAID array from those disks. During this process the controller will add specific identifying data to a hidden portion of each disk to help mark it as a member of its RAID. Some RAID controllers use uncommon, proprietary, techniques to mark those disks and this would make it almost impossible for you to replace that card with another from a different manufacturer and, sometimes, you wouldn’t be able to replace that card with something from the same manufacturer.

For instance, I found that LSI SAS9341-4i and SAS 9361-4i cards are not interchangeable. If you initialise the disks on the 9341 you will not be able to move them to the 9361 without reformatting.

So, make sure any RAID card you opt for can be easily replaced by another.

The easiest test is to remove the card and plug your disks into an HBA, or directly into the mainboard. If the OpenZFS/TrueNAS system recognises the drives, you should be fine using that card.

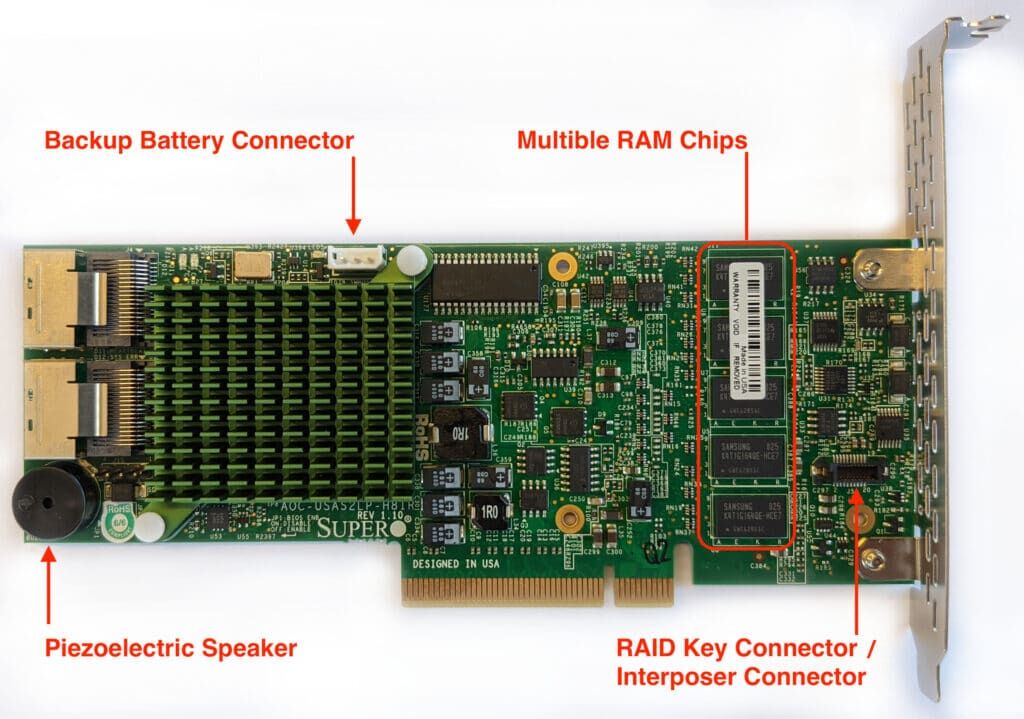

Caching reads and writes is where a good RAID controller can improve performance – because RAID controllers use ECC RAM to cache disk IO. As mentioned before, losing the read cache will not corrupt your data since the original data still resides on the pool. What can really cause problems is a power interruption or hardware failure of the card during a write operation. To shield yourself against that, select a battery-backed controller. Just like the PFP mechanism in an SSD, the battery’s function is to keep the cache circuitry (RAM chips) of the card active long enough for the pending writes to complete.

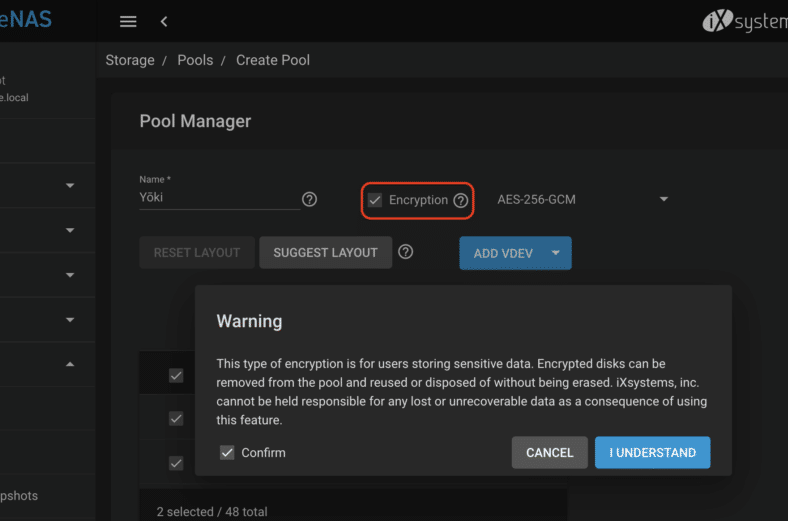

If your RAID controller offers on-chip encryption of the RAID member disks do not activate that feature. If you are storing sensitive data, or if you just want to enjoy the additional security data encryption can offer you, use the built-in encryption feature in TrueNAS Core. It is important to note that:

- Pool encryption can only be activated during pool creation.

- You must back up your encryption keys.

No one can help you recover your data if you lose them!

It could happen that your selected chassis can physically host a larger number of disks than that supported by your mainboard and/or backplane. Also, some RAID controllers come with external SAS ports that could be used to attach a JBOD enclosure to your server, allowing you to significantly increase your usable storage capacity without having to purchase additional complete servers.

Finally, avoid overheating. RAID (and HBA) controllers have their own CPUs and most of them come with just a basic heatsink attached. OpenZFS generates a lot of IO that will whip your RAID/HBA card CPU to its top clocks most of the time – especially during pool scrubbing and disk resilvering. This can introduce unnecessary downtime – in addition to the cost of a replacement card. I have witnessed the demise of some expensive cards that were deployed in poorly ventilated cases.

Use a well-ventilated chassis. If you have free PCI slots on your mainboard, try to space your cards apart for better airflow and to avoid creating heat traps between them. Sometimes, removing a couple of PCI slot metal covers will help. If your card has only a heatsink, try to position the card directly in the air stream of one of the chassis fans. It would be even better if you could attach a dedicated fan to the card.

Should I use a RAID controller with OpenZFS?

No. The fact that you can use one does not mean that you should use one. If you are not sure whether or not you should use a RAID card, the chances are you do not need one.

Most of the time you will be better off with a good SAS backplane or a passive, reliable HBA. If you already have a RAID card lying around that can help you increase the number of disks you attach to your system, set it to JBOD mode or pass-through mode, or create multiple RAID0 arrays containing a single disk each – provided you have read and followed the “What to avoid” tips above. Do not use other on-chip RAID functions.

The reason is: You do not want to stack different RAIDs on top of each other, because none of them will be fully aware of what the other is doing. Using both the on-chip RAID and the software RAIDZ of OpenZFS will only complicate things for you, especially when troubleshooting problems.

Also, some RAID cards tend to suppress S.M.A.R.T. messages, which TrueNAS relies on to probe the disks and send you early alerts about disk issues and possible disk failures.
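One way to check whether a controller lets S.M.A.R.T. data through is to query a disk behind it with `smartctl -H` from smartmontools. The following is a minimal sketch, not how TrueNAS itself does it: the function names are made up for illustration, and it assumes smartmontools is installed and the script runs with sufficient privileges.

```python
import subprocess


def smart_health_ok(smartctl_output: str) -> bool:
    """Return True if smartctl's health summary reports a healthy disk."""
    # ATA disks report "... test result: PASSED";
    # SAS/SCSI disks report "SMART Health Status: OK".
    return ("test result: PASSED" in smartctl_output
            or "SMART Health Status: OK" in smartctl_output)


def check_disk(device: str) -> bool:
    """Run `smartctl -H` on a device node and interpret the result.

    Returns False both for an unhealthy disk and when no health
    summary comes back at all (e.g. the controller hides S.M.A.R.T.).
    """
    result = subprocess.run(
        ["smartctl", "-H", device],
        capture_output=True, text=True, check=False,
    )
    return smart_health_ok(result.stdout)
```

Calling `check_disk("/dev/da0")` (the device name is only an example) on a disk attached through the card gives a quick indication: if a disk you know to be healthy returns `False`, the controller is probably not passing S.M.A.R.T. data through.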

What is IT-mode?

IT-mode is short for Initiator Target mode. When your RAID/HBA card is in IT-mode, it directly passes individual drives to the host system so OpenZFS can construct and manage its own RAIDZ arrays – without any interference from the on-card RAID firmware.

If your card does not come pre-flashed with an IT-mode firmware, there is a good chance you can find one for it – especially if it is an LSI card. Cross-flashing LSI firmware is a relatively simple procedure. Search the TrueNAS forums for guidance regarding your specific card.

What is a recommended RAID/HBA for a TrueNAS build?

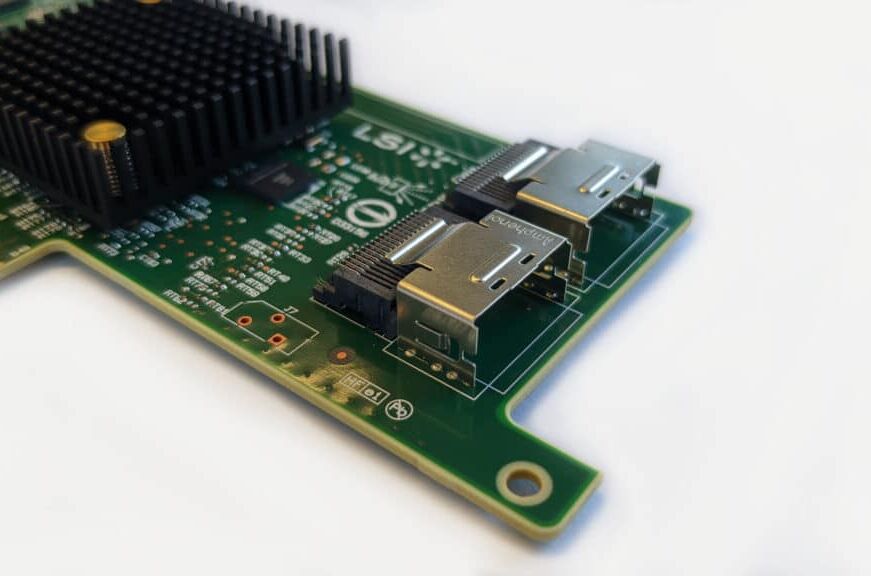

LSI adapters are the go-to for OpenZFS. They have proven to be robust, with excellent driver and firmware support.

Depending on the type of disks you will use in your server you will most likely be choosing between two types of interfaces:

| Connector | Generation | Supports |
|---|---|---|
| SFF-8087 | Older interface | SAS1/SAS2 (3.0 and 6.0 Gbps) |
| SFF-8643 | Newer interface | SAS3 (12 Gbps) |

Some modern LSI cards with the SFF-8643 Connector can also support NVMe/U.2 SSD disks, which makes them quite useful in builds where you intend to use L2ARC and SLOG devices.

Example model numbers:

- For PCIe 2.0 boards: the LSI SAS 9211-8i or LSI 9220-8i

- For PCIe 3.0 boards: the LSI SAS 9207-8i

- For 12 Gbit SAS: the LSI 9305-8i/16i/24i SAS

Avoid older adapters such as the LSI 1068. Those adapters cannot support disks larger than 2 TB.

Why are we talking about older PCIe 2.0 boards and adapters?

Because, for home and small office builds, those boards are still very usable and quite economical.

The same applies to older PCIe 2.0 adapters when using SATA HDDs. For instance, the LSI 9211-8i can be had for as little as €50 and supports up to 8 drives. This card can handle anything your new 14 TB SATA 3.0 HDDs can push without a hiccup.
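A rough back-of-the-envelope check of that claim could look like the sketch below. The figures are assumptions for illustration only: the 9211-8i is treated as a PCIe 2.0 x8 card with roughly 500 MB/s of usable bandwidth per lane, and each HDD is assumed to sustain about 250 MB/s sequentially, which is on the optimistic side for spinning disks.

```python
# Back-of-the-envelope check: can a PCIe 2.0 x8 HBA keep up with 8 HDDs?
# All figures below are illustrative assumptions, not measurements.

PCIE2_MB_PER_LANE = 500   # PCIe 2.0: 5 GT/s with 8b/10b encoding, ~500 MB/s per lane
HBA_LANES = 8             # assumed x8 card
HDD_MB_PER_SEC = 250      # assumed sequential throughput of one large SATA HDD
NUM_DISKS = 8


def headroom_ratio(lanes: int, mb_per_lane: int, disks: int, mb_per_disk: int) -> float:
    """Return host-link bandwidth divided by the combined disk throughput."""
    link_bandwidth = lanes * mb_per_lane
    disk_demand = disks * mb_per_disk
    return link_bandwidth / disk_demand


ratio = headroom_ratio(HBA_LANES, PCIE2_MB_PER_LANE, NUM_DISKS, HDD_MB_PER_SEC)
print(f"Link: {HBA_LANES * PCIE2_MB_PER_LANE} MB/s, "
      f"disks: {NUM_DISKS * HDD_MB_PER_SEC} MB/s, headroom: {ratio:.1f}x")
```

Under these assumptions the host link offers about twice what eight HDDs can deliver together, so the disks, not the card, remain the bottleneck. With SSDs the picture changes quickly, so rerun the numbers for your own drives.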

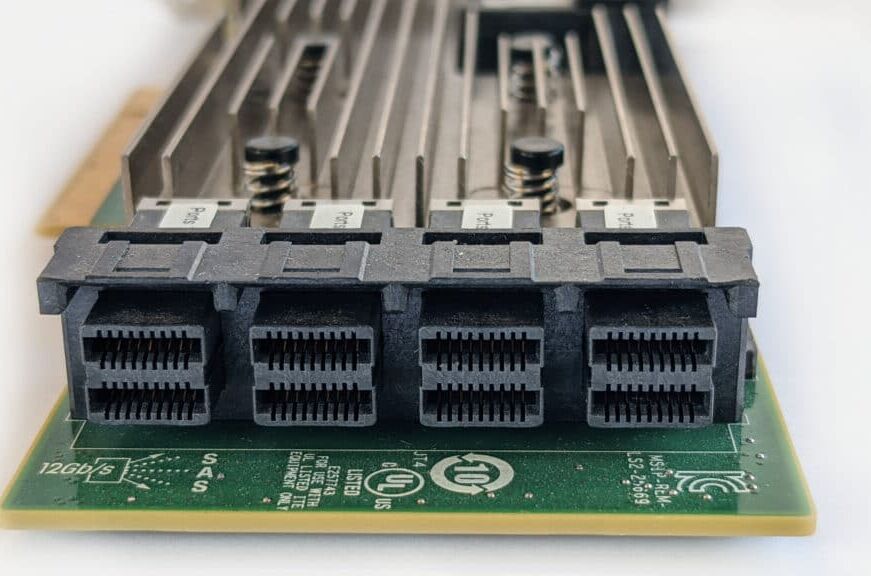

What do the “i” and “e” suffixes in the HBA name mean?

Those suffixes indicate the number of internal and/or external devices supported by the card.

For instance, the LSI 9305-8i card can support up to 8 internal devices, the LSI 9305-8i-4e card can support up to 8 internal devices plus 4 external ones, and the LSI 9305-4e can support 4 external devices.

So, if you want an HBA that can support both internal drives and an external JBOD, make sure you choose accordingly.
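The naming convention is regular enough to read mechanically. As a small illustration, the sketch below pulls the internal and external counts out of a model string; `parse_port_suffix` is a made-up helper name, and the parsing rule is simply the convention described above, not an official vendor scheme.

```python
import re


def parse_port_suffix(model: str) -> dict:
    """Extract internal/external counts from a model name such as '9305-8i-4e'."""
    counts = {"internal": 0, "external": 0}
    # Look for a number immediately followed by a lowercase 'i' or 'e',
    # e.g. '8i' (8 internal) or '4e' (4 external).
    for number, kind in re.findall(r"(\d+)([ie])", model):
        key = "internal" if kind == "i" else "external"
        counts[key] += int(number)
    return counts


for name in ("LSI 9305-8i", "LSI 9305-8i-4e", "LSI 9305-4e"):
    print(name, parse_port_suffix(name))
```

This is handy when filtering a long list of second-hand listings for cards that have at least one external connector.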

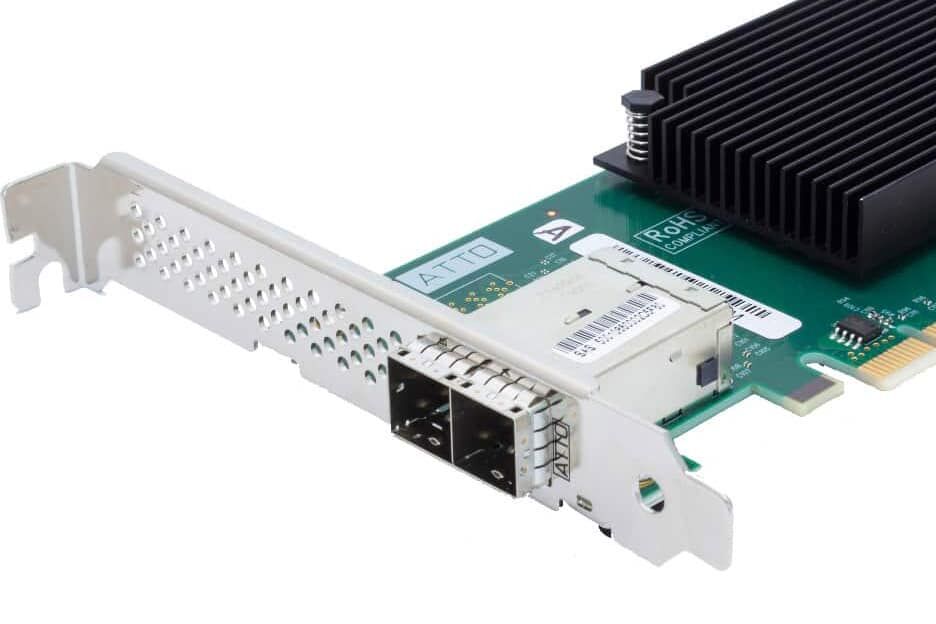

What is a recommended SAS adapter for attaching external JBODs and tape libraries?

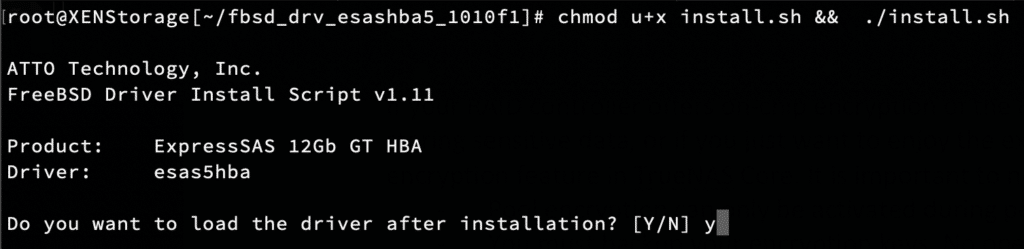

Again, LSI is your best choice for an HBA. Next come ATTO HBAs. They have stable drivers and work well with external SAS JBODs and tape libraries.

The ATTO External SAS HBA

How to spot a RAID card

Mixing old/shelved disks into a JBOD is not always useful. Keep in mind that, within a VDEV, the capacity and the speed of that VDEV are tied to its smallest and slowest member.

- If you group 3x 8 TB disks + 1x 2 TB disk into the same VDEV, you are practically using 4x 2 TB disks and you have just wasted 18 TB of disk space.

- Also, if you group 3x 2 TB SAS disks + 1x SATA 2 TB into the same VDEV, you have just downgraded the SAS disks to SATA performance.

You will be better off unifying your drives.
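The arithmetic behind the first example can be sketched as follows. `vdev_waste` is a hypothetical helper, and it works on raw capacity only: it ignores RAIDZ parity, metadata overhead, and the difference between TB and TiB.

```python
def vdev_waste(disk_sizes_tb):
    """Return (effective_raw_tb, wasted_tb) for disks grouped into one VDEV.

    Every member is treated as if it were as small as the smallest disk.
    """
    smallest = min(disk_sizes_tb)
    effective = smallest * len(disk_sizes_tb)
    wasted = sum(disk_sizes_tb) - effective
    return effective, wasted


effective, wasted = vdev_waste([8, 8, 8, 2])
print(f"Effective raw capacity: {effective} TB, wasted: {wasted} TB")
```

For the 3x 8 TB + 1x 2 TB case this gives 8 TB of effective raw capacity and 18 TB left unused, which matches the figure above.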

Warning: When using external JBODs, it is advised to construct independent pools on them, instead of letting your pools span both the server and the JBOD. This is because if your JBOD shuts off or disconnects and your server stays on there is a high risk of corrupting the data on your pool.

If you use JBODs, make sure they have redundant PSUs and that both your server and your JBOD are sitting in a rack that can supply them with enough power, and that the rack is well protected by a good UPS.

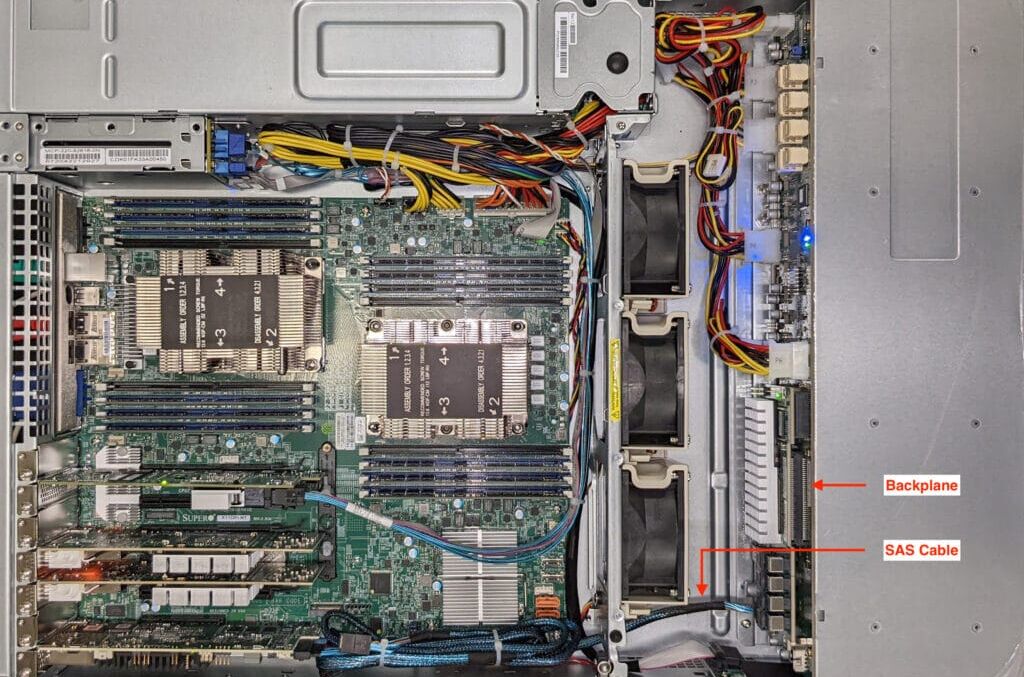

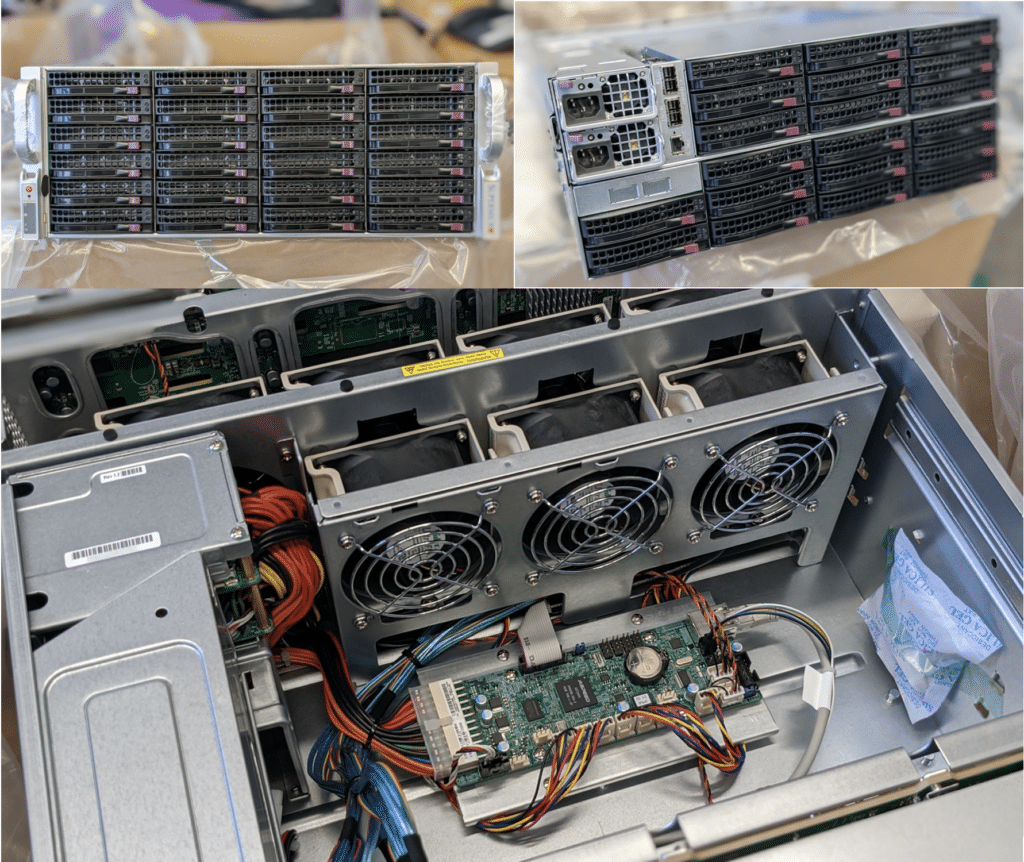

Front side, back side, and the inside of a Supermicro 4-bay JBOD. Note the tiny SAS controller inside, and the absence of a mainboard.

The Supermicro 825TQ SAS Backplane.

What are SAS Expander Backplanes?

A SAS expander backplane is a more intelligent backplane board with a number of SAS connectors (e.g. SFF-8640) that you can use to expand the number of disks you can connect to your HBA/RAID controller. It functions in a way similar to a network switch by giving you more SAS ports to use, although it uses SAS as the communication protocol.

With a SAS expander you can connect more disks to a single HBA than you could normally attach to that HBA using direct cabling. It saves you money because you do not have to buy multiple HBAs or controllers to plug all your disks into the same box.
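Much like hosts behind a network switch, the disks behind an expander share the cabling back to the HBA. A quick calculation shows what each disk gets when all of them are busy at once. The numbers are assumptions chosen for illustration: a single 4-lane SAS2 uplink at roughly 600 MB/s per lane (6 Gbps with 8b/10b encoding) and a 24-bay backplane.

```python
# Illustrative only: per-disk share of a shared expander uplink.

SAS2_MB_PER_LANE = 600   # assumed usable throughput of one 6 Gbps SAS lane
UPLINK_LANES = 4         # assumed: one 4-lane cable between HBA and expander
NUM_DISKS = 24           # assumed: 24-bay expander backplane


def per_disk_share(lanes: int, mb_per_lane: int, disks: int) -> float:
    """Return the uplink bandwidth available to each disk if all are busy."""
    return lanes * mb_per_lane / disks


share = per_disk_share(UPLINK_LANES, SAS2_MB_PER_LANE, NUM_DISKS)
print(f"Uplink: {UPLINK_LANES * SAS2_MB_PER_LANE} MB/s, "
      f"per-disk share with {NUM_DISKS} busy disks: {share:.0f} MB/s")
```

With these figures each disk gets about 100 MB/s in the worst case, which mostly matters during scrubs and resilvers when every disk is active. A second uplink cable or a faster SAS generation raises the share accordingly.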

One downside of SAS expanders is that they have their own on-board I/O chips which, under high loads, can introduce a minute amount of latency to your disk I/O and slightly affect your server’s performance. This is worth mentioning, although it will not significantly impact the server performance.

Some SAS expanders, such as Supermicro’s SAS2-846EL2, have two expander chips, which allow for dual-port connectivity – thus offering a bit of protection against single-chip/single-port failure.

You should avoid SATA-only expanders unless you absolutely know you will never want a performance upgrade. They offer you no path to a future upgrade to SAS drives, so you would have to purchase a new chassis. Spend a little bit more and enjoy both better performance (remember: SAS is 12 Gbps) and future savings.

Not all backplanes have expanders in them. For budget builds, older backplanes such as the 846TQ should be OK. They are dumb passthrough boards and require a lot of cables to wire the drives to your HBAs.

Also, avoid the older, first-generation 846EL2 SAS expanders (not the SAS2-846EL2 mentioned above), because they do not support disks larger than 2 TB.

What constitutes a good server chassis for my build?

A good chassis should:

- Be able to host your planned capacity

- Have redundant power supplies with enough power for the components and the drives

- Have good ventilation to keep your drives cool

- Have a backplane with an expander that supports your disk connector type (SATA/SAS/U.2 etc.)

For home servers, and where you can afford some downtime, you can get away with a single power supply.

For anything serious, use a chassis with redundant PSUs with a wattage rating that can cover your components’ consumption – plus some more.
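A simple way to arrive at a target wattage is to add up the estimated draw of each component and apply a safety margin. The sketch below does just that. The component figures and the 25% headroom are placeholder assumptions, not recommendations, and the calculation ignores the higher current that HDDs draw while spinning up.

```python
import math


def required_psu_watts(component_watts: dict, headroom: float = 0.25) -> int:
    """Return the summed component draw plus a fractional safety margin."""
    total = sum(component_watts.values())
    return math.ceil(total * (1 + headroom))


# Hypothetical build; replace these with figures from your own datasheets.
build = {
    "mainboard_cpu_ram": 120,
    "hba": 15,
    "hdds_8x": 8 * 10,
    "fans": 20,
}

print(f"Estimated draw: {sum(build.values())} W, "
      f"target PSU rating: {required_psu_watts(build)} W")
```

With redundant PSUs, each supply on its own should be able to cover the target figure, so that the server keeps running when one of them fails.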