The Starline ZFS FAQ

Understanding the basics of ZFS architecture

TrueNAS ZFS RAIDZ ZPool VDEV FAQ

Learn how the advanced and reliable ZFS works in our FAQ.

Understanding the structure of the ZFS architecture will help you achieve two important goals:

- Select the best hardware components for your TrueNAS storage server

- Configure your server to give you both the performance and the data security you aim for

Common POSIX filesystems serve one major purpose: retaining data, and its relevant metadata, in a structured and accessible form. To use a disk with such a filesystem, you first need a partitioning tool or volume manager (think fdisk, gparted, LVM, etc.) to create volumes (think partitions and RAID groups). Then you have to format those volumes with your preferred filesystem (FAT, EXT4, NTFS, XFS, etc.).

ZFS, on the other hand, is not just a filesystem: it is also its own volume manager. It can create volumes and assemble and manage its own software RAID structures, known as RAIDZ. This also eliminates the need for a hardware RAID controller. In fact, you are generally discouraged from using one, but this does not mean you cannot use one if you wish to. You just need to know which controllers to use with ZFS and how to configure them. More on this point later on.

Let us now familiarise ourselves with some of the most important ZFS terminology: ZPools, VDEVs, datasets, volumes, ARC, and ZIL.

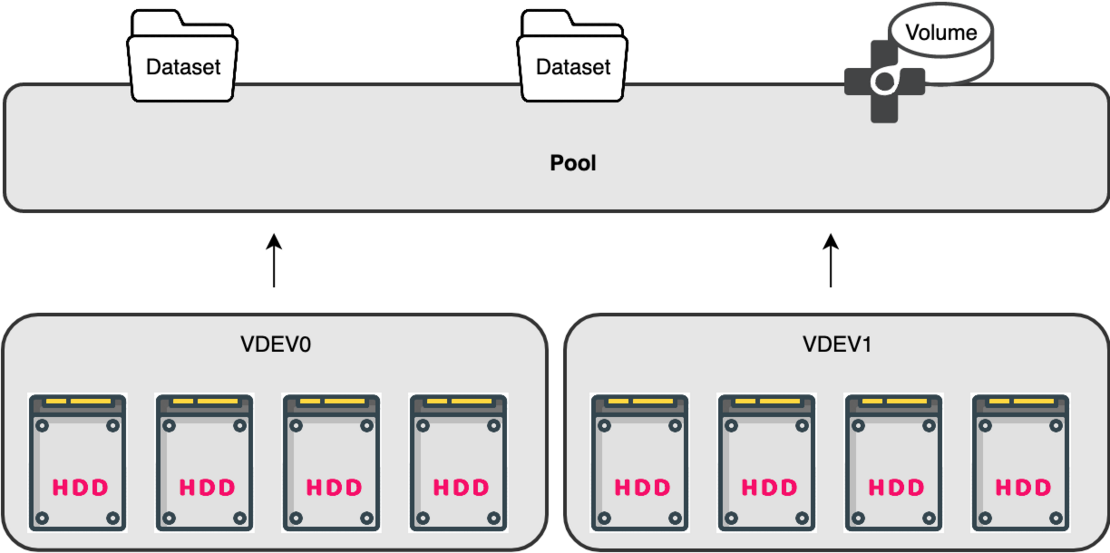

What is a ZPool?

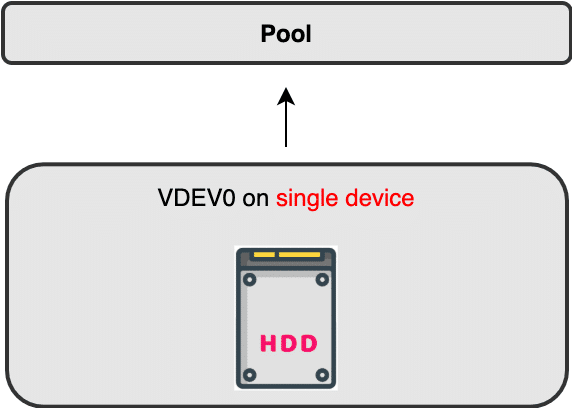

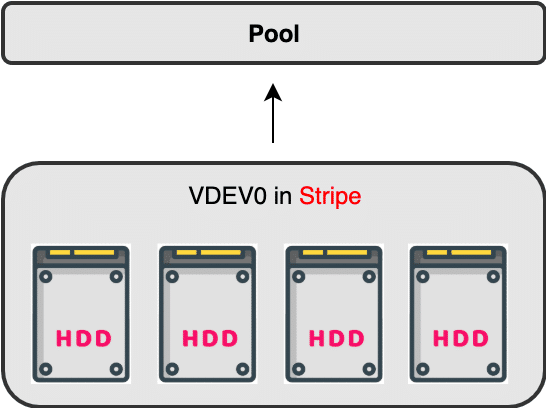

In any ZFS storage solution, your data is saved to a ZFS pool (ZPool for short). A ZPool consists of one or more virtual devices (VDEVs), and those VDEVs consist of physical disks (devices). If a pool contains more than one VDEV, data is always striped across them. This means that any disk you want the pool to use must belong to a VDEV.

This has two very important consequences:

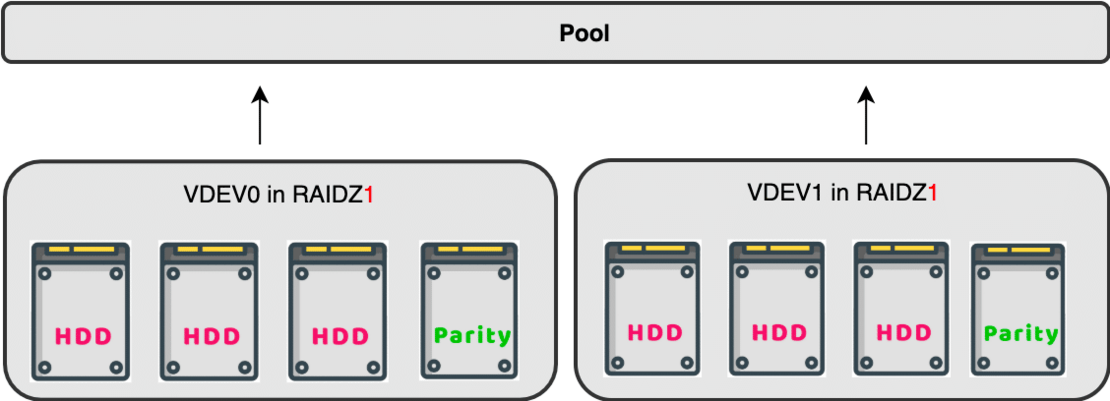

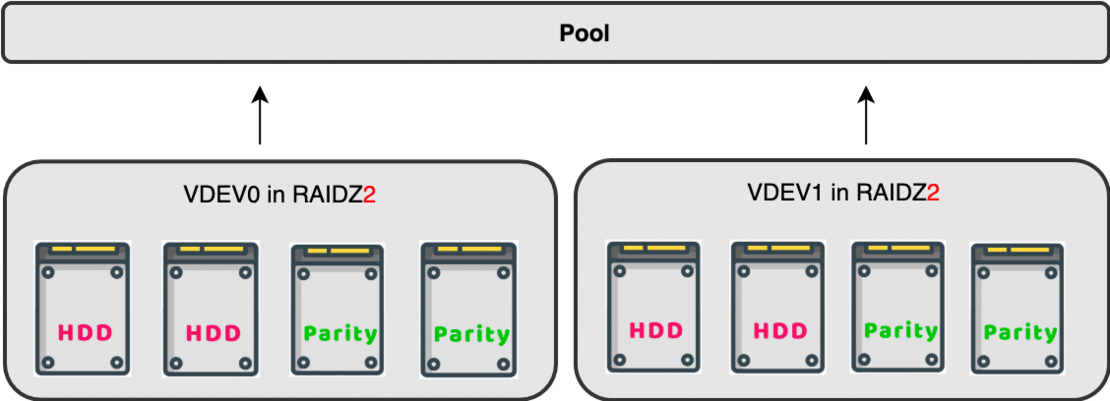

- In ZFS, redundancy is provided at the VDEV level, not at the pool level.

- If you lose one complete VDEV, you lose your pool.

A ZPool consisting of two striped VDEVs
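The two rules above can be expressed as a small Python sketch. The `Vdev` class, the layout names, and `pool_is_alive` are hypothetical and only model the redundancy rules described here; they are not part of ZFS or TrueNAS. Each VDEV survives as long as no more of its disks have failed than its layout tolerates, and the pool survives only if every VDEV does.

```python
from dataclasses import dataclass

# Hypothetical model: failed disks each layout tolerates per VDEV.
PARITY_TOLERANCE = {"disk": 0, "raidz1": 1, "raidz2": 2, "raidz3": 3}


@dataclass
class Vdev:
    layout: str      # "disk", "mirror", "raidz1", "raidz2" or "raidz3"
    disks: int       # number of member disks
    failed: int = 0  # number of member disks that have failed

    def tolerance(self) -> int:
        """Number of member disks this VDEV can lose and keep serving data."""
        if self.layout == "mirror":
            return self.disks - 1  # a mirror survives while one copy remains
        return PARITY_TOLERANCE[self.layout]

    def is_alive(self) -> bool:
        return self.failed <= self.tolerance()


def pool_is_alive(vdevs: list[Vdev]) -> bool:
    # Data is striped across all VDEVs, so losing any one VDEV loses the pool.
    return all(vdev.is_alive() for vdev in vdevs)


# Two striped RAIDZ2 VDEVs, six disks each.
pool = [Vdev("raidz2", disks=6, failed=2), Vdev("raidz2", disks=6)]
print(pool_is_alive(pool))  # True: neither VDEV has lost more than two disks

pool[1].failed = 3
print(pool_is_alive(pool))  # False: the second VDEV is lost, and so is the pool
```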

During writes, ZFS spreads data across the VDEVs of the pool, favouring the VDEVs with more free space so that they fill up at roughly the same rate. There are exceptions to this rule, where ZFS decides it is better to place slightly more data on one device because it fits better within the VDEV or pool. More on this later on.
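To illustrate free-space-weighted writes, here is a simplified Python sketch that splits one write across VDEVs in proportion to their free space. The function name and units are hypothetical, and real ZFS allocates space through metaslabs with additional heuristics, so treat this as a model of the principle rather than of ZFS's actual allocator.

```python
def split_write(write_size: int, free_space: dict[str, int]) -> dict[str, int]:
    """Split write_size units across VDEVs in proportion to their free space.

    Uses integer shares plus the largest-remainder method, so the shares always
    add up to write_size and no VDEV receives more than its free space.
    """
    total_free = sum(free_space.values())
    if write_size > total_free:
        raise ValueError("not enough free space in the pool")

    shares = {}
    remainders = {}
    for name, free in free_space.items():
        # The exact proportional share is write_size * free / total_free.
        shares[name], remainders[name] = divmod(write_size * free, total_free)

    # Hand out the units lost to rounding, largest remainder first.
    leftover = write_size - sum(shares.values())
    for name in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        shares[name] += 1
    return shares


# A hypothetical pool where vdev-a has twice as much free space as vdev-b.
print(split_write(120, {"vdev-a": 4000, "vdev-b": 2000}))
# {'vdev-a': 80, 'vdev-b': 40}
```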

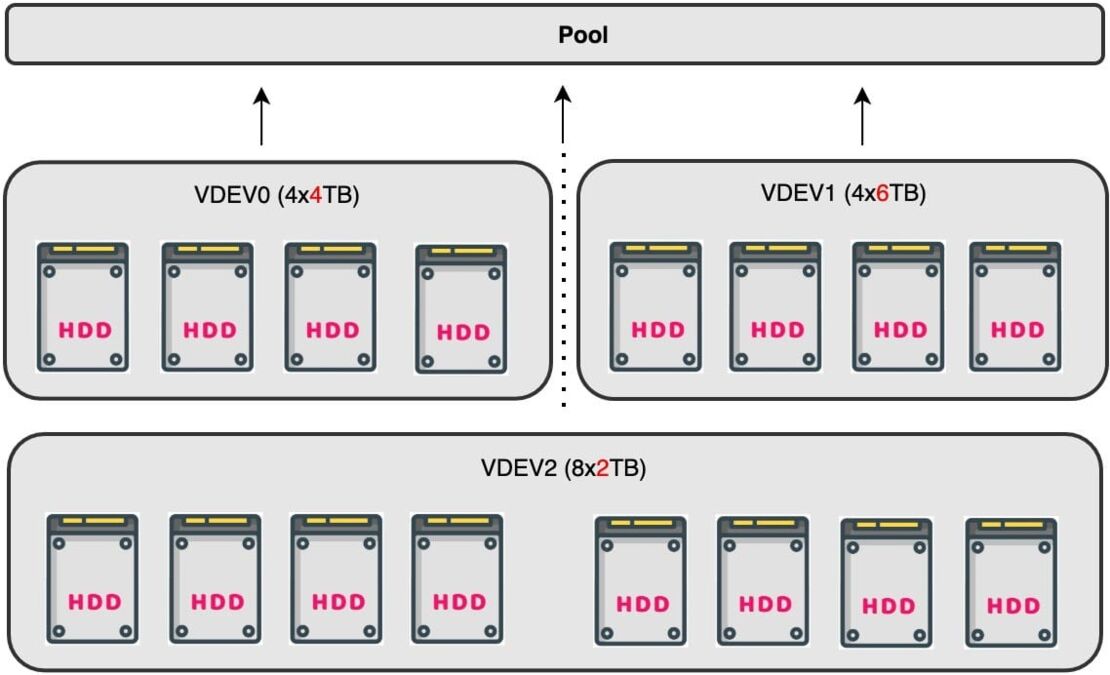

Can I increase the capacity of a ZPool?

- You can increase a ZPool's capacity by adding VDEVs or by growing one or more of its existing VDEVs.

- You can increase the capacity of any VDEV by replacing all of its disks with larger ones.

This means that to double the capacity of a VDEV built from 4x 2 TB disks, you need to replace the 2 TB disks with 4 TB disks one by one, waiting each time for ZFS to finish its recovery process (known as resilvering). Once ZFS has finished resilvering the fourth disk, you will see the new capacity on your TrueNAS > Pools page.

But growing a pool is largely a one-way process. You cannot decrease the capacity of a VDEV, and you cannot remove a data VDEV from a ZPool that contains RAIDZ VDEVs.
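The disk-by-disk upgrade described above can be sketched in Python. The helper below uses the common rule of thumb that a RAIDZ VDEV's usable capacity is (number of disks - parity disks) x the smallest disk; it ignores ZFS metadata and padding overhead, the function name is hypothetical, and the 4-disk RAIDZ2 layout is an assumption for the example. Because the smallest disk sets the size, the capacity only grows once the last 2 TB disk is gone.

```python
def raidz_usable_tb(disk_sizes_tb: list[int], parity: int) -> int:
    """Rule-of-thumb usable capacity of a RAIDZ VDEV (ignores ZFS overhead)."""
    return (len(disk_sizes_tb) - parity) * min(disk_sizes_tb)


disks = [2, 2, 2, 2]  # hypothetical 4-disk RAIDZ2 VDEV built from 2 TB disks
print(f"start: {raidz_usable_tb(disks, parity=2)} TB")
for i in range(len(disks)):
    disks[i] = 4  # swap one 2 TB disk for a 4 TB disk, then let it resilver
    print(f"after replacing disk {i + 1}: {raidz_usable_tb(disks, parity=2)} TB")
# start: 4 TB, then 4, 4, 4 and finally 8 TB once all four disks are replaced
```

On a real pool, the extra space only appears if the pool's `autoexpand` property is enabled (or after running `zpool online -e` on the replaced disks).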

A ZPool consisting of mismatching VDEVs

What are VDEVs? And which VDEV types does ZFS support?

VDEVs are ZFS virtual devices, built by grouping one or more physical disks into one of the VDEV types ZFS supports. Within a ZPool, VDEVs behave much like individual disks in a stripe: the pool spreads data across all of them. A VDEV can be any of the following:

| VDEV type | Description |
|---|---|
| Physical disk | An HDD, SSD, PCIe NVMe drive, etc. |
| File | An absolute path to a pre-allocated file or image |
| Mirror | A standard software RAID1 mirror |
| RAIDZ1/2/3 | ZFS's non-standard, distributed-parity software RAID |
| Hot spare | Disks marked as hot spares for ZFS software RAID |
| Cache | A device for the ZFS Level 2 Adaptive Replacement Cache, known as L2ARC |
| Log | A separate log device (SLOG) for the ZFS Intent Log (ZIL)* |

*By default, the ZIL is written to the same data disks in the ZPool.
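The VDEV types map directly onto the arguments of the `zpool create` command. TrueNAS normally builds pools through its web interface, so the Python sketch below only prints an equivalent command line to show how the types combine. The helper name, the pool name `tank`, and device names such as `da0` and `nvd0` are hypothetical placeholders; do not run the printed command against disks that hold data.

```python
import shlex


def zpool_create_command(pool, data_vdevs, log=(), cache=(), spares=()):
    """Build a `zpool create` command line from a simple layout description.

    data_vdevs is a list of (type, devices) pairs, for example
    ("raidz2", ["da0", "da1", "da2", "da3"]) or ("mirror", ["da0", "da1"]).
    """
    argv = ["zpool", "create", pool]
    for vdev_type, devices in data_vdevs:
        argv += [vdev_type, *devices]  # data VDEVs are striped by the pool
    if log:
        # Mirror the SLOG whenever more than one log device is given.
        argv += ["log", "mirror", *log] if len(log) > 1 else ["log", *log]
    if cache:
        argv += ["cache", *cache]  # L2ARC devices
    if spares:
        argv += ["spare", *spares]  # hot spares
    return shlex.join(argv)


print(zpool_create_command(
    "tank",
    [("raidz2", [f"da{i}" for i in range(6)])],
    log=["nvd0", "nvd1"],
    cache=["nvd2"],
    spares=["da6"],
))
# zpool create tank raidz2 da0 da1 da2 da3 da4 da5 log mirror nvd0 nvd1 cache nvd2 spare da6
```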

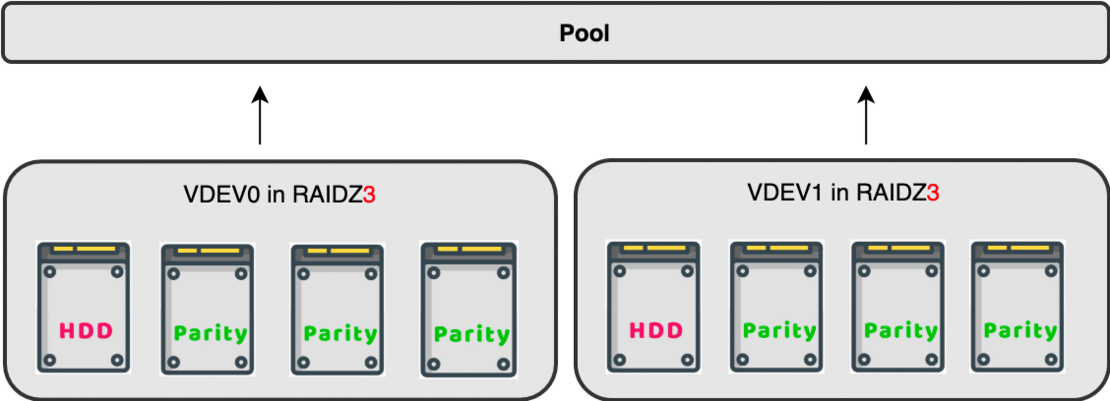

What is RAIDZ?

RAIDZ is a non-standard software RAID that ZFS can use to construct VDEVs using available devices. By non-standard we mean it is ZFS-specific and does not conform to other common software RAID standards.

RAIDZ supports the following three levels of RAID:

| RAIDZ level | Comparable to | Parity (disks' worth) | Disk-loss tolerance per VDEV | Relative speed |
|---|---|---|---|---|
| RAIDZ1 | RAID5 | One | 1 disk | Fastest |
| RAIDZ2 | RAID6 | Two | 2 disks | Slower |
| RAIDZ3 | No standard level (informally "RAID7") | Three | 3 disks | Slowest |

Which RAIDZ is faster? Which RAIDZ is safer?

From the table above you can see that while RAIDZ3 is the safest, tolerating the loss of up to three disks per VDEV, it is also the slowest of the three, because triple parity must be calculated and written to the devices.

RAIDZ3 is also the least space-efficient, because three disks' worth of capacity in each VDEV is used for parity.

RAIDZ1, on the other hand, is the fastest and the most space efficient of the three. But it is also the most dangerous.

RAIDZ2 is a balance between the two. It offers higher performance than RAIDZ3 and better protection than RAIDZ1. It is the setup we usually recommend to our ZFS/TrueNAS customers.

From our experience, we can only advise against RAIDZ1. In spite of its relatively fast performance, it offers the worst data protection of the three. If you are so unlucky as to lose a second disk in the same VDEV during the recovery process (resilvering), you lose that VDEV and, with it, your entire pool.

Note: the usable capacity of any ZPool is determined by the structure of its underlying VDEVs.
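As a rough illustration of how the RAIDZ level trades capacity for protection, the Python sketch below compares the three levels for a hypothetical VDEV of six 4 TB disks. It uses the rule of thumb usable capacity = (disks - parity) x disk size and ignores ZFS metadata, padding, and reserved space, so real-world numbers will be somewhat lower. The helper name is hypothetical.

```python
def raidz_summary(disks: int, disk_tb: int, parity: int) -> str:
    """Rule-of-thumb capacity and efficiency of one RAIDZ VDEV."""
    raw_tb = disks * disk_tb
    usable_tb = (disks - parity) * disk_tb
    return (f"RAIDZ{parity}: {usable_tb} TB usable of {raw_tb} TB raw "
            f"({usable_tb / raw_tb:.0%}), survives {parity} failed disk(s)")


for parity in (1, 2, 3):
    print(raidz_summary(disks=6, disk_tb=4, parity=parity))
# RAIDZ1: 20 TB usable of 24 TB raw (83%), survives 1 failed disk(s)
# RAIDZ2: 16 TB usable of 24 TB raw (67%), survives 2 failed disk(s)
# RAIDZ3: 12 TB usable of 24 TB raw (50%), survives 3 failed disk(s)
```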

Can I use RAIDZ1 if I have a backup of my data?

Only if you have a watertight and continuous backup plan. TrueNAS Core supports both replication and cloud backup to various storage providers.

Please keep in mind that if your daily operations rely on the immediate availability of your data, replication (having two identical primary and secondary servers) is the way to go. Restoring from a cloud backup means downtime, since you first have to retrieve the data and make it available throughout your network again.

What is a Hot-Spare?

A hot spare is a device that can be used to replace a failed or faulty device in a ZPool. A hot-spare device stays inactive in the pool until you instruct ZFS/TrueNAS to use it to replace the faulty device. Hot spares are not a must, but they are highly recommended for mission-critical servers because they help shorten the overall recovery time: with a spare disk already in place, resilvering can start without waiting for a replacement disk to arrive.

Just keep the following two points in mind:

- Hot spares occupy server slots, which reduces the usable capacity of your server.

- Spare disks must be identical to, or larger than, the disks they will replace.
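The size rule above can be sketched as a small Python helper. `pick_spare` is hypothetical: it only checks sizes, and it prefers the smallest spare that is large enough so that bigger spares remain available for bigger disks. ZFS and TrueNAS perform their own checks when a spare is actually used.

```python
from typing import Optional


def pick_spare(failed_disk_tb: float, spares_tb: list[float]) -> Optional[float]:
    """Return the smallest spare at least as large as the failed disk, or None."""
    usable = [size for size in spares_tb if size >= failed_disk_tb]
    return min(usable) if usable else None


print(pick_spare(4, [2, 8, 4]))   # 4: the 2 TB spare is too small
print(pick_spare(10, [2, 8, 4]))  # None: no spare is large enough
```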

Hot spares themselves do not offer any data redundancy. They are static (sleeping) members of the ZPool that contribute neither to the storage capacity nor to the performance. They are there just in case a device fails. They are also most useful when you want to start the recovery process remotely, since the spare is already plugged into the chassis.

With that in mind, we advise you not to overstuff your server with hot spares, because every spare disk you plug in takes the place of a disk that you could actually use to store your data. Your VDEV layout (RAIDZ2, RAIDZ3, mirroring, etc.) still has your back.

Also, consider buying your spares with your new server – especially if you cannot wait for back-orders and couriers.

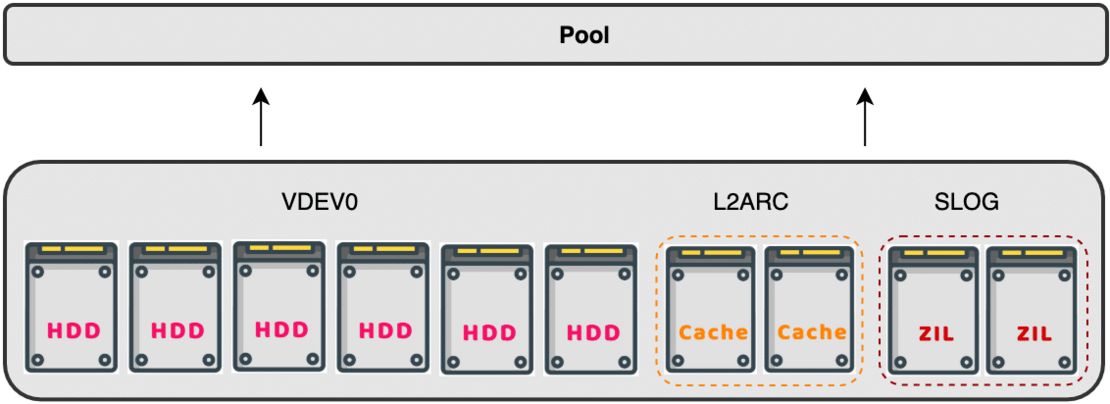

What is a Cache device?

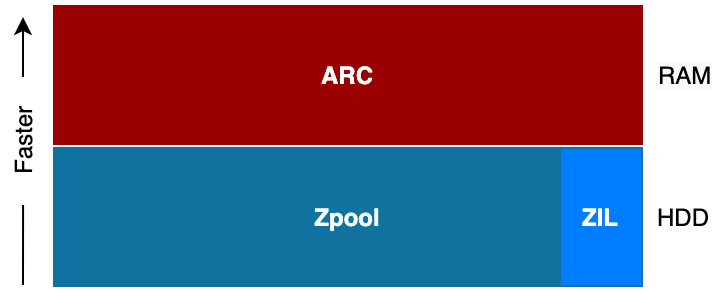

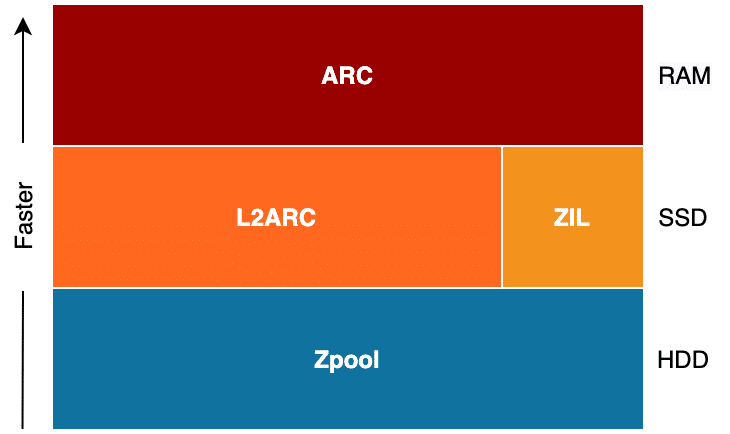

By default, ZFS uses system RAM to cache data blocks that have been read. This accelerates repeated client requests for the same data, because ZFS can serve them directly from RAM instead of going back to the pool disks. This primary cache is known as the Adaptive Replacement Cache (ARC), and it always lives in RAM.

Although it is always advised to add more physical RAM modules when you want to increase the amount of cached reads, sometimes you might want to add a Level-2 Adaptive Replacement Cache (L2ARC) to your system, especially when you run out of RAM slots, when RAM is too expensive to obtain, or when you have some blazing fast NVMe drives to use as L2ARC.

Keep in mind that increasing the amount of physical system RAM is always better than using an L2ARC.
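The lookup order described in this section (RAM first, then L2ARC, then the pool disks) can be sketched in Python. This is a toy model under loud assumptions: it uses a plain LRU policy instead of ZFS's adaptive replacement algorithm, it moves blocks into the L2ARC only when they are evicted from RAM, and all class names are hypothetical.

```python
from collections import OrderedDict


class LRUCache:
    """Fixed-size cache that evicts the least recently used block."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.blocks = OrderedDict()

    def get(self, block_id):
        if block_id not in self.blocks:
            return None
        self.blocks.move_to_end(block_id)  # mark as most recently used
        return self.blocks[block_id]

    def put(self, block_id, data):
        """Store a block; return the evicted (block_id, data) pair, if any."""
        self.blocks[block_id] = data
        self.blocks.move_to_end(block_id)
        if len(self.blocks) > self.capacity:
            return self.blocks.popitem(last=False)
        return None


class ToyReadPath:
    """RAM cache (ARC) first, then L2ARC, then the pool disks."""

    def __init__(self, arc_blocks: int, l2arc_blocks: int, disks: dict):
        self.arc = LRUCache(arc_blocks)
        self.l2arc = LRUCache(l2arc_blocks)
        self.disks = disks  # block id -> data, stands in for the ZPool

    def read(self, block_id):
        data = self.arc.get(block_id)
        if data is not None:
            return data, "ARC"
        data, source = self.l2arc.get(block_id), "L2ARC"
        if data is None:
            data, source = self.disks[block_id], "disks"
        evicted = self.arc.put(block_id, data)
        if evicted is not None:
            self.l2arc.put(*evicted)  # simplification: RAM evictions feed the L2ARC
        return data, source


reads = ToyReadPath(arc_blocks=2, l2arc_blocks=4,
                    disks={n: f"block-{n}" for n in range(10)})
print([reads.read(n)[1] for n in (1, 1, 2, 3, 1)])
# ['disks', 'ARC', 'disks', 'disks', 'L2ARC']
```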

What is ZIL? And what is a SLOG device?

ZFS keeps a special log called the ZFS Intent Log (ZIL). This is where ZFS logs synchronous operations before they are written to the ZPool array. A synchronous write is one where the client sends a piece of data to ZFS and waits for an acknowledgement (ACK) that the data is safely on stable storage before it considers the write complete and moves on. Although this provides better data safety, it slows things down, especially when your ZPool is built on slow spinning disks.

To speed things up without risking data loss on a power interruption, ZFS can move the ZIL to a separate, faster flash device, known as a separate log device (SLOG). ZFS still collects incoming writes in RAM and flushes them to the pool disks in periodic transaction groups; in the meantime, the SLOG holds a persistent copy of the synchronous writes. This lets ZFS send the acknowledgement back to the client as soon as that copy is safe, shortening the time until the next batch of data can be sent, and the logged writes are replayed if the system crashes before they reach the pool's backing disks.
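The role of the intent log can be illustrated with a toy Python model. The class and method names are hypothetical, and the model ignores timing; it only shows the ordering that matters: a synchronous write is recorded in the persistent log before the ACK, the data itself reaches the pool disks later from RAM, and the log is only read back after a crash. Whether that log sits on the pool disks or on a SLOG changes how quickly the ACK can be sent, not this logic.

```python
class ToyIntentLog:
    """Toy model of synchronous writes with a ZFS-style intent log."""

    def __init__(self):
        self.dirty = {}       # RAM: writes waiting for the next transaction group
        self.intent_log = []  # persistent: the ZIL, on the pool disks or a SLOG
        self.disks = {}       # persistent: the pool's data blocks

    def write(self, key, value, sync: bool) -> str:
        self.dirty[key] = value
        if sync:
            # A synchronous write is logged persistently before the ACK.
            self.intent_log.append((key, value))
        return "ACK"

    def commit_txg(self):
        # Periodically, dirty data in RAM is written to the pool disks and
        # the corresponding log records are no longer needed.
        self.disks.update(self.dirty)
        self.dirty.clear()
        self.intent_log.clear()

    def crash_and_recover(self):
        self.dirty.clear()  # a power failure wipes RAM
        for key, value in self.intent_log:
            self.disks[key] = value  # replay logged synchronous writes
        self.intent_log.clear()


pool = ToyIntentLog()
pool.write("invoice.db", "v1", sync=True)
pool.write("scratch.tmp", "x", sync=False)
pool.crash_and_recover()
print(pool.disks)  # {'invoice.db': 'v1'}: the asynchronous write was lost
```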

A diagram of a ZPool with the ZIL integrated into the pool’s disks

A diagram of a ZPool with separate flash ZIL and L2ARC disks

Finally, we strongly advise you to mirror your SLOG devices. This helps protect against data loss caused by the sudden failure of a single SSD. Using enterprise-grade NVMe drives with Power Failure Protection (PFP) is a must for any mission-critical ZFS server. Enterprise hardware is built to higher manufacturing standards, can survive years of 24/7 operation, comes with an extended warranty, and has a significantly higher endurance rating (DWPD, drive writes per day) than consumer-grade hardware.
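Endurance ratings are easy to compare with a little arithmetic. Vendors usually quote either total terabytes written (TBW) or drive writes per day (DWPD) over the warranty period; the Python sketch below converts one into the other and checks a hypothetical SLOG workload, assuming every synchronous write is written to the SLOG roughly once. The function names and all figures in the example are made up for illustration.

```python
def dwpd_from_tbw(tbw: float, capacity_gb: float, warranty_years: float) -> float:
    """Drive writes per day implied by a TBW rating over the warranty period."""
    return tbw * 1000 / (capacity_gb * warranty_years * 365)


def slog_endurance_ok(daily_sync_writes_gb: float, capacity_gb: float,
                      rated_dwpd: float) -> bool:
    """Assumes each synchronous write lands on the SLOG about once."""
    return daily_sync_writes_gb / capacity_gb <= rated_dwpd


# Hypothetical 400 GB NVMe drive rated for 7300 TBW over a 5-year warranty.
rating = dwpd_from_tbw(tbw=7300, capacity_gb=400, warranty_years=5)
print(rating)  # 10.0
# A workload of 2000 GB of synchronous writes per day needs 5 DWPD.
print(slog_endurance_ok(2000, capacity_gb=400, rated_dwpd=rating))  # True
```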

Examples of structured ZPools and VDEVs

ZPool with separate NVMe devices for L2ARC and ZIL

TL;DR

ZPools and VDEVs

- ZPools are created from virtual devices (VDEVs), which in turn are built from disks (devices).

- If a ZPool contains more than one VDEV, the VDEVs are striped.

- A ZPool capacity is the sum of the capacities of its underlying VDEVs – depending on internal VDEVs structure (RAIDZ type, Mirroring, Striping, etc.).

- You can create a ZPool using a JBOD.

- The usable capacity of a RAIDZ VDEV is roughly (the number of disks - the number of parity disks) X the capacity of the smallest disk.

- You should create a ZPool using VDEVs of the same size (built using identical disks).

- You can grow a ZPool by adding more VDEVs

- You cannot remove a data VDEV from a ZPool that contains RAIDZ VDEVs.

- You can grow a VDEV by replacing all member disks one by one and resilvering the pool.

- Resilvering is the process by which ZFS rebuilds data onto a replaced or upgraded disk.

- You cannot remove a disk from a RAIDZ VDEV.

- ZPool redundancy is defined at the VDEV level.

- If you lose a complete VDEV you will lose your pool.

- There are three levels of RAIDZ: 1, 2, and 3. The digit is the number of parity disks, i.e. how many disk failures each VDEV can tolerate.

- Do not use RAIDZ1. It offers the worst protection level.

- Again, do not use RAIDZ1.

TrueNAS

- You can have multiple pools within your TrueNAS Core server.

- The best pool setup is a middle-ground between capacity, performance, and data protection.

- The usable capacity of your ZPool is determined by underlying VDEVs structure.

- TrueNAS Core supports both replication and cloud backup.

- Replication is having one primary TrueNAS server clone its contents on a secondary server. It is preferred that both servers are hardware-identical, but it is not a must.

- A hot-spare is a device that could be used to replace another faulty device in a ZPool.

- ZFS has two levels of read cache: ARC and L2ARC

- ARC lives in the RAM.

- L2ARC can be fast NVMe or SSD.

- It is always best to add more physical RAM than to add L2ARC.

- ZFS has an intent log (the ZFS Intent Log, ZIL) where it logs synchronous operations before they are written to the ZPool array.

- By default, the ZIL resides on the same disks where the data is stored.

- To speed up synchronous writes, ZFS can use separate devices for the ZIL. These are usually fast NVMe drives or SSDs. Because they are separate, they are called SLOGs (separate log devices).

- SLOG devices do not have to be BIG, but they must be resilient.

- You must mirror your SLOG devices to protect against single device failure.

- You should stripe your L2ARC devices to improve performance.